Newsletter Subscribe

Enter your email address below and subscribe to our newsletter

NVIDIA has made several key announcements at the recent GTC (GPU Technology Conference). Firstly, NVIDIA officially launched its next-generation AI GPU—the Blackwell B100. However, the keynote emphasized the entire Blackwell platform instead of focusing solely on the B100 graphics card.

During the introduction, Jensen Huang, CEO of NVIDIA, highlighted that this is a massive GPU that can be used to build an enormous computer cluster or a supercomputer. Jensen Huang introduced the Blackwell Die, which usually refers to an unpackaged chip in the semiconductor industry, also known as a bare die. The Blackwell Die can be considered the core of the B100 graphics card, containing approximately 104 billion transistors manufactured using TSMC’s 4nm enhanced process technology. The B200 is essentially a combination of two Blackwell B100 bare dies packaged together, similar to the technology used by Apple in their M2 Ultra. However, NVIDIA’s data transfer rate is significantly faster than Apple’s. Regarding transistor count, the B100 has increased by approximately 25% compared to the H100. As for the B200, since it combines two B100 bare dies, its transistor count is 2.5 times that of the H100.

Before comparing the computational capabilities of the B200 and H100, it is essential to understand the FP4 and FP8 formats. Both FP4 and FP8 are floating-point formats used to represent decimal numbers. FP4 uses 4 bits to represent a floating-point number, while FP8 uses 8 bits, resulting in higher precision for FP8.

Jensen Huang emphasized the significantly enhanced computational capabilities of the B200 GPU, reaching 20 Peta Flops (PFLOPS), which is five times the 4 PFLOPS of the H100. However, Jensen Huang’s comparison is slightly misleading because the 20 PFLOPS of the B200 is based on the FP4 format, while the 4 PFLOPS of the H100 is based on the FP8 format. Since FP4 has lower precision than FP8, directly comparing their PFLOPS is not entirely fair. For a fair comparison, if both GPUs’ performance is measured using the FP8 standard, the actual performance of the B200 GPU would be 10 PFLOPS, while the H100 is 4 PFLOPS. If considering only the B100, it would be 5-7 PFLOPS.

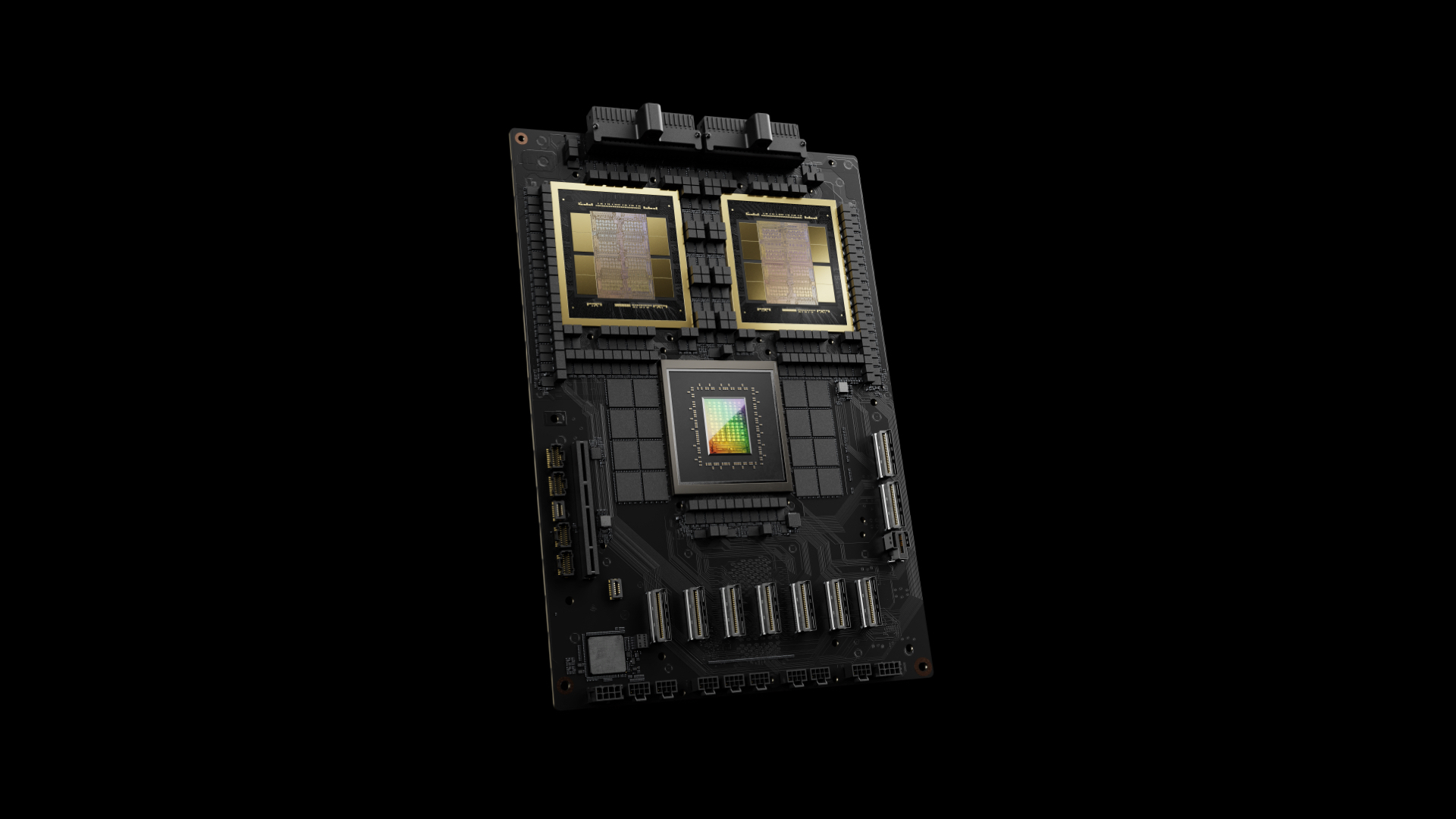

Jensen Huang’s next focus was on the GB200 Superchip, which is not an entirely new concept but an upgraded version of the previous GH200 Superchip. The GH200 Superchip consists of one NVIDIA Grace CPU and two H100 GPUs, while the GB200 Superchip has undergone significant upgrades. This new super chip combines one Grace CPU and two Blackwell B200 GPUs, connected using NVLink technology. The new Superchips boasts up to 864 GB of High Bandwidth Memory (HBM), an additional 480 GB compared to the total memory of 384 GB from the two B200 GPUs.

NVIDIA introduced the Blackwell Compute Node, a server that houses two GB200 Superchips, equivalent to four B200 GPUs and two Grace CPUs. This configuration offers higher compute density than NVIDIA’s previous generation HGX server. The old HGX server typically had eight GPUs and a size of 8U, while the Blackwell Compute Node has been significantly reduced to 1U or 2U.

NVIDIA’s recent release is the GB200 NVL72, a rack-based unit where “NVL” represents NVIDIA’s high-speed data transfer technology, NVLink. In a single NVL72 rack, 18 Blackwell Compute Nodes are equipped, each containing 4 B200 GPUs. As a result, the entire rack will have 72 B200 GPUs. This is a substantial improvement compared to NVIDIA’s previous generation H100 rack, where an 8U rack could accommodate a maximum of 4 servers with 32 GPUs.

NVIDIA introduced the second-generation computation engine, Transformer Engine 2, optimized for large language models and Transformer architectures. This technology enables the GPU to automatically select the FP8 or FP4 computation format based on the computational requirements, enhancing the flexibility and efficiency of calculations. If a part of a model can be computed using FP4 without losing accuracy, the system automatically switches to FP4 to accelerate the computation speed.

According to Jensen Huang, training a 1.8 billion parameter GPT (Generative Pre-trained Transformer) model using the old H100 GPU required 8,000 H100s, took 90 days, and consumed 15 MW of power. With NVIDIA’s latest Blackwell architecture and GB200, the same training task only requires 2,000 GPUs and can be completed within the same 90-day period, but with a significantly reduced power consumption of 4 MW. Both the number of required GPUs and power consumption are only one-fourth of the previous requirements.

Apart from Jensen Huang’s keynote, there were other exciting developments. NVIDIA recently launched a new software service called NIM (NVIDIA Inference Microservice). NIM allows for running a container directly on the GPU, encapsulating all the necessary components for AI execution and eliminating the need for manual setup and installation. Installing the NIM Microservice on an older GPU can be used to run AI models and provide the required services, with a microservice architecture for communication between them.

NVIDIA has been a significant player in the field of robotics. During his presentation, Jensen Huang showcased a series of humanoid robots, including two small and adorable Disney robots, which surprised many people unaware of NVIDIA’s involvement in robotics research and development. One of the highlights in the robotics domain at this GTC conference was the update of numerous features in NVIDIA’s robot development platform, Isaac. In addition to the Isaac platform, NVIDIA introduced a new robotic foundation or base model called GR00T.

GR00T is not a physical robot but rather the “brain” of a robot, with two main functions: understanding human language and imitating human actions through learning. Many people find GR00T impressive, but it is more of a “generic model.” NVIDIA trains this foundation model, allowing all manufacturers interested in developing humanoid robots to use it and then fine-tune it for commercialization or application in actual products. GR00T’s positioning differs from Optimus, primarily serving as a foundation model for other manufacturers. As an analogy, GR00T’s situation may be similar to Meta’s Llama 2, which has sufficient capabilities to assist robot manufacturers who cannot develop their brains by providing a generic model for development.